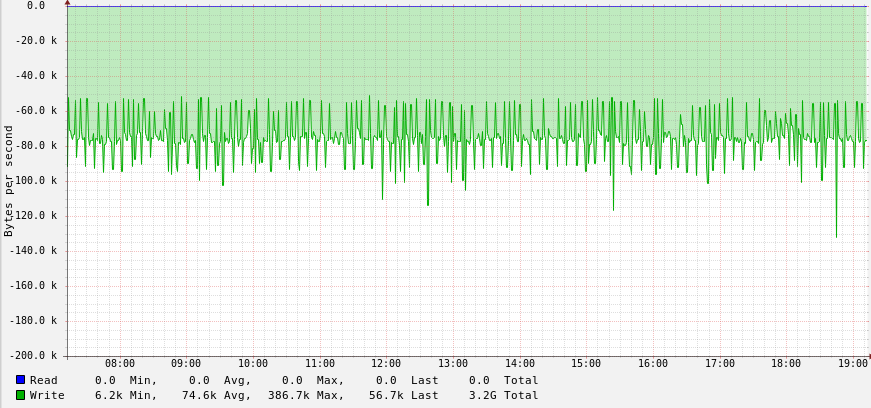

On a system which has de-facto nothing to do (a router basically), I observed constant disk writes with collectd:

As you can see, a total of 3.2 GB was written to the disk in 12 hours alone.

Using lsof, I compared two snapshots, taken 30 seconds apart, of all files open for writing:

diff <(lsof | awk 'NR==1 || $4~/[0-9]+[uw]/ && $5~/REG/ && $6~/8,1/') <(sleep 30; lsof | awk 'NR==1 || $4~/[0-9]+[uw]/ && $5~/REG/ && $6~/8,1/')

I know that the only physical disk in the system is device 8,1. Regular files are denoted by REG, and a file open for writing has an FD mode of either w (write) or u (read and write). The SIZE/OFF column gives a rough idea of how many bytes were written in the last 30 seconds.

The output looks like this:

< rsyslogd 509 root 6w REG 8,1 3936622 785024 /var/log/syslog

---

> rsyslogd 509 root 6w REG 8,1 3937650 785024 /var/log/syslog

8c8

< rsyslogd 509 root 12w REG 8,1 1636869 786638 /var/log/daemon.log

---

> rsyslogd 509 root 12w REG 8,1 1637897 786638 /var/log/daemon.log
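In the output above, /var/log/syslog grew from 3936622 to 3937650 bytes, that is 1028 bytes in 30 seconds. Instead of reading the difference off the diff by eye, the subtraction can be scripted. The following is only a sketch: the function name `lsof_growth` is made up for illustration, and it assumes the nine-column lsof layout shown above (COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME) saved to two files.

```shell
# Hypothetical helper: print how many bytes each open file grew between two
# saved lsof snapshots. Assumes the 9-column lsof layout from the article;
# file names containing spaces are cut at the first space.
lsof_growth() {
    awk '
        # First snapshot: remember SIZE/OFF, keyed by PID, FD and NAME.
        NR == FNR { size[$2 " " $4 " " $9] = $7; next }
        # Second snapshot: report entries whose SIZE/OFF increased.
        {
            key = $2 " " $4 " " $9
            if (key in size) {
                delta = $7 - size[key]
                if (delta > 0)
                    print delta, $1, $9
            }
        }
    ' "$1" "$2"
}
```

A possible way to use it: save `lsof > before.txt`, wait 30 seconds, save `lsof > after.txt`, then run `lsof_growth before.txt after.txt | sort -rn` to list the fastest-growing files first.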

Clearly, something was logging constantly. After watching the logs for a moment, I could identify the problem and resolve it.

But I still had not found the real cause. The log messages contributed to the writes but were not the root cause.

This is where iotop comes to the rescue.

Start with

iotop -a -o

to show only processes that are actually doing I/O (-o) and to accumulate their totals instead of showing the current bandwidth (-a). Let it run for a few minutes.

This way I found a process that wrote files every so often but did not keep them open. That is why it slipped through the search with lsof!
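Where iotop is not installed, a rough stand-in is to read the kernel's per-process counters directly. The sketch below is not what iotop does internally; it simply reads the `write_bytes` field from `/proc/<pid>/io` on Linux. The function name `proc_write_totals` and its optional proc-root argument are made up for illustration. Note that these counters cover the whole lifetime of each process rather than the time since the tool was started, that reading other users' processes requires root, and that a short-lived writer can exit between two looks.

```shell
# Sketch: list cumulative bytes written to storage per process, largest first.
# Output format: BYTES PID COMMAND. The proc-root argument defaults to /proc
# and exists only so the function can be pointed at a different directory.
proc_write_totals() {
    root=${1:-/proc}
    for dir in "$root"/[0-9]*; do
        # Skip processes whose counters we are not allowed to read.
        [ -r "$dir/io" ] || continue
        bytes=$(awk '$1 == "write_bytes:" { print $2 }' "$dir/io" 2>/dev/null)
        name=$(cat "$dir/comm" 2>/dev/null)
        # Only report processes that have written something.
        if [ -n "$bytes" ] && [ "$bytes" -gt 0 ]; then
            printf '%s %s %s\n' "$bytes" "${dir##*/}" "$name"
        fi
    done | sort -rn
}
```

Running `proc_write_totals | head` as root twice, a few minutes apart, and comparing the numbers shows which processes keep writing, whether or not they hold their files open.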